I ran across an article claiming that F# was about an order of magnitude faster at calculating a basic math algorithm. Can this be true?

Sigmoid in C#

public static float Sigmoid(float value)

{

return 1.0f / (1.0f + (float) Math.Exp(-value));

}

//csc -o -debug-

.method public static float32 Sigmoid(float32 'value') cil managed

{

.maxstack 8

L_0000: ldc.r8 1

L_0009: ldc.r8 1

L_0012: ldarg.0

L_0013: neg

L_0014: conv.r8

L_0015: call float64 [mscorlib]System.Math::Exp(float64)

L_001a: add

L_001b: div

L_001c: conv.r4

L_001d: ret

}

Sigmoid in F#

let Sigmoid value = 1.0f/(1.0f + exp(-value));

//fsc --debug- --optimize+

.method public static float32 Sigmoid(float32 'value') cil managed

{

.maxstack 5

.locals init (

[0] float32 num)

L_0000: ldc.r4 1

L_0005: ldc.r4 1

L_000a: ldarg.0

L_000b: neg

L_000c: stloc.0

L_000d: ldloc.0

L_000e: conv.r8

L_000f: call float64 [mscorlib]System.Math::Exp(float64)

L_0014: conv.r4

L_0015: add

L_0016: div

L_0017: ret

}

The IL generated is nearly the same. The F# version allocates a little less memory using float32 variables on the stack where C# uses float64 and generally manages with a smaller stack, but the basic math operations are the same. Can these differences make an order of magnitude performance difference? Short answer is no.

Benchmarks are Tricky

C# sigmoid algorithm benchmark

// (c) Rinat Abdullin

// http://abdullin.com/journal/2009/1/5/caching-activation-function-is-not-worth-it.html

// sigmoidcs.cs

using System;

using System.Diagnostics;

static class App

{

const float Scale = 320.0f;

const int Resolution = 2047;

const float Min = -Resolution/Scale;

const float Max = Resolution/Scale;

static readonly float[] _lut = InitLut();

static float[] InitLut()

{

var lut = new float[Resolution + 1];

for (int i = 0; i < Resolution + 1; i++)

{

lut[i] = (float) (1.0/(1.0 + Math.Exp(-i/Scale)));

}

return lut;

}

static float Sigmoid1(double value)

{

return (float) (1.0/(1.0 + Math.Exp(-value)));

}

static float Sigmoid2(float value)

{

if (value <= Min) return 0.0f;

if (value >= Max) return 1.0f;

var f = value*Scale;

if (value >= 0) return _lut[(int) (f + 0.5f)];

return 1.0f - _lut[(int) (0.5f - f)];

}

static float TestError()

{

var emax = 0.0f;

for (var x = -10.0f; x < 10.0f; x += 0.000001f)

{

var v0 = Sigmoid1(x);

var v1 = Sigmoid2(x);

var e = Math.Abs(v1 - v0);

if (e > emax) emax = e;

}

return emax;

}

static double TestPerformancePlain()

{

var sw = new Stopwatch();

sw.Start();

for (int i = 0; i < 10; i++)

{

for (float x = -5.0f; x < 5.0f; x += 0.000001f)

{

Sigmoid1(x);

}

}

return sw.Elapsed.TotalMilliseconds;

}

static double TestPerformanceOfLut()

{

var sw = new Stopwatch();

sw.Start();

for (int i = 0; i < 10; i++)

{

for (float x = -5.0f; x < 5.0f; x += 0.000001f)

{

Sigmoid2(x);

}

}

return sw.Elapsed.TotalMilliseconds;

}

static void Main()

{

var emax = TestError();

var t0 = TestPerformancePlain();

var t1 = TestPerformanceOfLut();

Console.WriteLine("Max detected deviation using LUT: {0}", emax);

Console.WriteLine("10^7 iterations using Sigmoid1() took {0} ms", t0);

Console.WriteLine("10^7 iterations using Sigmoid2() took {0} ms", t1);

}

}

F# sigmoid algorithm benchmark

// (c) Rinat Abdullin

// http://abdullin.com/journal/2009/1/6/f-has-better-performance-than-c-in-math.html

// sigmoidfs.fs

#light

let Scale = 320.0f;

let Resolution = 2047;

let Min = -single(Resolution)/Scale;

let Max = single(Resolution)/Scale;

let range step a b =

let count = int((b-a)/step);

seq { for i in 0 .. count -> single(i)*step + a };

let lut = [|

for x in 0 .. Resolution ->

single(1.0/(1.0 + exp(-double(x)/double(Scale))))

|]

let sigmoid1 value = 1.0f/(1.0f + exp(-value));

let sigmoid2 v =

if (v <= Min) then 0.0f;

elif (v>= Max) then 1.0f;

else

let f = v * Scale;

if (v>0.0f) then lut.[int (f + 0.5f)]

else 1.0f - lut.[int(0.5f - f)];

let getError f =

let test = range 0.00001f -10.0f 10.0f;

let errors = seq {

for v in test ->

abs(sigmoid1(single(v)) - f(single(v)))

}

Seq.max errors;

open System.Diagnostics;

let test f =

let sw = Stopwatch.StartNew();

let mutable m = 0.0f;

let result =

for t in 1 .. 10 do

for x in 1 .. 1000000 do

m <- f(single(x)/100000.0f-5.0f);

sw.Elapsed.TotalMilliseconds;

printf "Max deviation is %f\n" (getError sigmoid2)

printf "10^7 iterations using sigmoid1: %f ms\n" (test sigmoid1)

printf "10^7 iterations using sigmoid2: %f ms\n" (test sigmoid2)

PS> csc -o -debug- .\sigmoidcs.cs

Microsoft (R) Visual C# 2010 Compiler version 4.0.30319.1

Copyright (C) Microsoft Corporation. All rights reserved.

PS> fsc --debug- --optimize+ .\sigmoidfs.fs

Microsoft (R) F# 2.0 Compiler build 4.0.30319.1

Copyright (c) Microsoft Corporation. All Rights Reserved.

PS> .\sigmoidcs.exe

Max detected deviation using LUT: 0.001663984

10^7 iterations using Sigmoid1() took 2644.5935 ms

10^7 iterations using Sigmoid2() took 510.9379 ms

PS> .\sigmoidfs.exe

Max deviation is 0.001664

10^7 iterations using sigmoid1: 403.974300 ms

10^7 iterations using sigmoid2: 124.520100 ms

Wow. That’s 404ms for F# and 2,656 for C#. That’s a huge difference. Why?

We already established that the IL for the Sigmoid1() algorithm above compiles to very similar IL. What else could be different?

Wait a minute.

let result =

for t in 1 .. 10 do

for x in 1 .. 1000000 do

m <- f(single(x)/100000.0f-5.0f);

The F# loop is not equivalent to the C# version. In the F# version the counter is an int and the C# version the counter is a float. (Also the F# version is passing a delegate to the test function.)

for (int i = 0; i < 10; i++)

{

for (float x = -5.0f; x < 5.0f; x += 0.000001f)

{

Sigmoid1(x);

}

}

Does that int counter thing make a big difference.

using System;

using System.Diagnostics;

static class App

{

static float Sigmoid1(double value)

{

return (float) (1.0/(1.0 + Math.Exp(-value)));

}

static double TestPerformancePlain()

{

var sw = new Stopwatch();

sw.Start();

float num = 0f;

for (int i = 0; i < 10; i++)

{

for (int j = 1; j < 1000001; j++)

{

num = Sigmoid1((((float) j) / 100000f) - 5f);

}

}

return sw.Elapsed.TotalMilliseconds;

}

static void Main()

{

var t0 = TestPerformancePlain();

Console.WriteLine("10^7 iterations using Sigmoid1() took {0} ms", t0);

}

}

PS> csc -o -debug- .\sigmoid-counter.cs

Microsoft (R) Visual C# 2010 Compiler version 4.0.30319.1

Copyright (C) Microsoft Corporation. All rights reserved.

PS> ./sigmoid-counter

10^7 iterations using Sigmoid1() took 431.6634 ms

Yup C# just executed that Sigmoid1() test in 431ms. Recall that F# was 404ms.

The other difference was that the F# code was passing a delegate of FsharpFunc<float,float> rather than making a method call. Lets try the same thing in C# using a Lambda expression to create an anonymous Func<float,float> delegate.

using System;

using System.Diagnostics;

static class App

{

static double Test(Func<float,float> f)

{

var sw = new Stopwatch();

sw.Start();

float num = 0f;

for (int i = 0; i < 10; i++)

{

for (int j = 1; j < 1000001; j++)

{

num = f((((float) j) / 100000f) - 5f);

}

}

return sw.Elapsed.TotalMilliseconds;

}

static void Main()

{

var t0 = Test( x => { return (float) (1.0/(1.0 + Math.Exp(-x))); } );

Console.WriteLine("10^7 iterations using Sigmoid1() took {0} ms", t0);

}

}

PS> ./sigmoid-counter

10^7 iterations using Sigmoid1() took 431.6634 ms

PS> csc -o -debug- .\sigmoid-lambda.cs

Microsoft (R) Visual C# 2010 Compiler version 4.0.30319.1

Copyright (C) Microsoft Corporation. All rights reserved.

PS> .\sigmoid-lambda.exe

10^7 iterations using Sigmoid1() took 275.1087 ms

Now C# is 130ms faster than F#.

For what it’s worth the C version of the Sigmoid1() benchmark executes in 166ms on my machine using CL 16 x64 on my machine.

- C: 166ms

- C# (delegate): 275ms

- C# (method): 431ms

- C# (method, float counter): 2,656ms

- F#: 404ms

So, what have we learned?

- Never, ever use a float as a counter in a for loop in C#.

- Invoking a delegate in the CLR is slightly faster than a normal method invocation.

- It’s very easy to measure something other than what you intended when benchmarking.

- In as much as this little test means anything, C# and F# have similar performance. C is still faster.

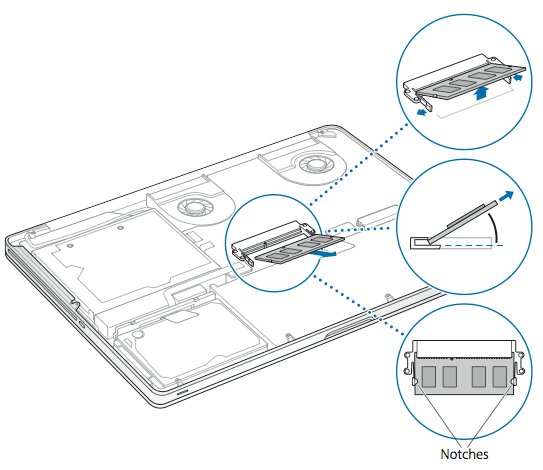

The late 2011 Sandy Bridge 15″ MacBook Pro machines come with 1300MHz 9-9-9 non-ECC DDR3 SO-DIMM RAM configurable up to 8GB in a BTO configuration. The 2012 Ivy Bridge models come with RAM operating at 1600MHz and the Retina MacBook Pro has a 16GB BTO option. The chipsets are similar and I was pretty sure that the non-Retina model can support 16GB of RAM and the Sandy Bridge models can run at the 20% faster 1600MHz just like the Ivy Bridge ones.

The late 2011 Sandy Bridge 15″ MacBook Pro machines come with 1300MHz 9-9-9 non-ECC DDR3 SO-DIMM RAM configurable up to 8GB in a BTO configuration. The 2012 Ivy Bridge models come with RAM operating at 1600MHz and the Retina MacBook Pro has a 16GB BTO option. The chipsets are similar and I was pretty sure that the non-Retina model can support 16GB of RAM and the Sandy Bridge models can run at the 20% faster 1600MHz just like the Ivy Bridge ones.

I have a confession. When .NET and C# beta came out in 1999, I was confused by the definition of struct as a “value type” allocated on the stack and class as a “reference type” allocated on the heap. We were told structs are lean and fast while classes are heavy and slow. I distinctly recall searching for every imaginable opportunity to use the struct keyword. I further recall being confused by the statement that everything in C# is passed by value and by the ref and out keywords. I used ref whenever I wanted to modify values in a formal parameter regardless of whether they were structs or classes. What I didn’t realize at the time was that ref and out are really just an explicit use of a pointer. Ref and provide a mechanism to manipulate a value type by pointer instead of manipulating a local copy. For reference types, though, using ref and out is the moral equivalent of using a pointer-to-pointer in C and it is rarely necessary or correct.

I have a confession. When .NET and C# beta came out in 1999, I was confused by the definition of struct as a “value type” allocated on the stack and class as a “reference type” allocated on the heap. We were told structs are lean and fast while classes are heavy and slow. I distinctly recall searching for every imaginable opportunity to use the struct keyword. I further recall being confused by the statement that everything in C# is passed by value and by the ref and out keywords. I used ref whenever I wanted to modify values in a formal parameter regardless of whether they were structs or classes. What I didn’t realize at the time was that ref and out are really just an explicit use of a pointer. Ref and provide a mechanism to manipulate a value type by pointer instead of manipulating a local copy. For reference types, though, using ref and out is the moral equivalent of using a pointer-to-pointer in C and it is rarely necessary or correct.